LLaMA-65B and 70B perform optimally when paired with a GPU that has a minimum of 40GB of VRAM. Suitable examples of GPUs for this... A related question: how much RAM is needed for Llama 2 70B with a 32k context? Reported throughput: llama-2-13b-chat.ggmlv3.q4_0.bin (CPU only), 3.81 tokens per second; llama-2-13b-chat.ggmlv3.q8_0.bin (CPU only)... Opt for a machine with a high-end GPU like NVIDIA's latest RTX 3090 or RTX 4090, or a dual-GPU setup, to accommodate the... The size of Llama 2 70B in fp16 is around 130GB, so no, you can't run Llama 2 70B fp16 with 2 x 24GB. You need 2 x 80GB GPUs or 4 x 48GB GPUs or...
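The "around 130GB" figure above can be reproduced with simple arithmetic: parameter count times bytes per parameter. The following is a minimal sketch, not a sizing tool. The function names are hypothetical, it assumes a round 70 billion parameters, it counts weights only (no KV cache, activations, or framework overhead), and it treats the advertised VRAM figures as GiB.

```python
def weight_memory_gib(n_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    return n_params * bytes_per_param / 2**30


def weights_fit(n_params: float, bytes_per_param: float,
                gpu_count: int, vram_per_gpu_gib: float) -> bool:
    """Check whether the weights fit in the combined VRAM of all GPUs.

    This ignores the KV cache, activations, and runtime overhead, so a
    True result is a necessary condition, not a guarantee.
    """
    total_vram = gpu_count * vram_per_gpu_gib
    return weight_memory_gib(n_params, bytes_per_param) <= total_vram


if __name__ == "__main__":
    n_params = 70e9      # assumed round figure for Llama 2 70B
    fp16_bytes = 2       # fp16 stores each parameter in 2 bytes

    print(f"fp16 weights: {weight_memory_gib(n_params, fp16_bytes):.1f} GiB")
    for count, vram in [(2, 24), (2, 80), (4, 48)]:
        ok = weights_fit(n_params, fp16_bytes, count, vram)
        print(f"{count} x {vram}GB: {'fits' if ok else 'does not fit'}")
```

Under these assumptions the weights come to roughly 130 GiB, which is why 2 x 24GB is ruled out while 2 x 80GB and 4 x 48GB leave some headroom. Long contexts such as 32k add KV-cache memory on top of this estimate.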

Chat with Llama 2 70B. Customize Llama's personality by clicking the settings button. "I can explain concepts, write poems and code, solve logic puzzles, or even name your..." We're unlocking the power of these large language models. Our latest version of Llama, Llama 2, is now accessible to individuals, creators, researchers, and businesses so they can experiment... For an example of how to integrate LlamaIndex with Llama 2, see here. We also published a completed demo app showing how to use LlamaIndex to chat with Llama 2 about live data via the... Learn how to effectively use Llama 2 models for prompt engineering with our free course on DeepLearning.AI, where you'll learn best practices and interact with the models through a simple API.

You can access Llama 2 models through Models as a Service (MaaS) using Microsoft's Azure AI Studio. Select the Llama 2 model appropriate for your application from the model catalog and deploy the model using the PayGo... How can we get access to a Llama 2 API key? I want to use the Llama 2 model in my application but don't know where to get an API key that I can use. I know we can host the model... Getting started with Llama 2: this manual offers guidance and tools to assist in setting up Llama, covering access to the model, hosting, instructional guides, and integration... Connecting to the Llama 2 API: to connect to the Llama 2 API, you need to follow these steps. Before you start, make sure you have a Meta account with access to the Llama 2... How to access and use LLaMA 2: given its open-source nature, there are numerous ways to interact with LLaMA 2. Here are just a few of the easiest ways to access and begin...

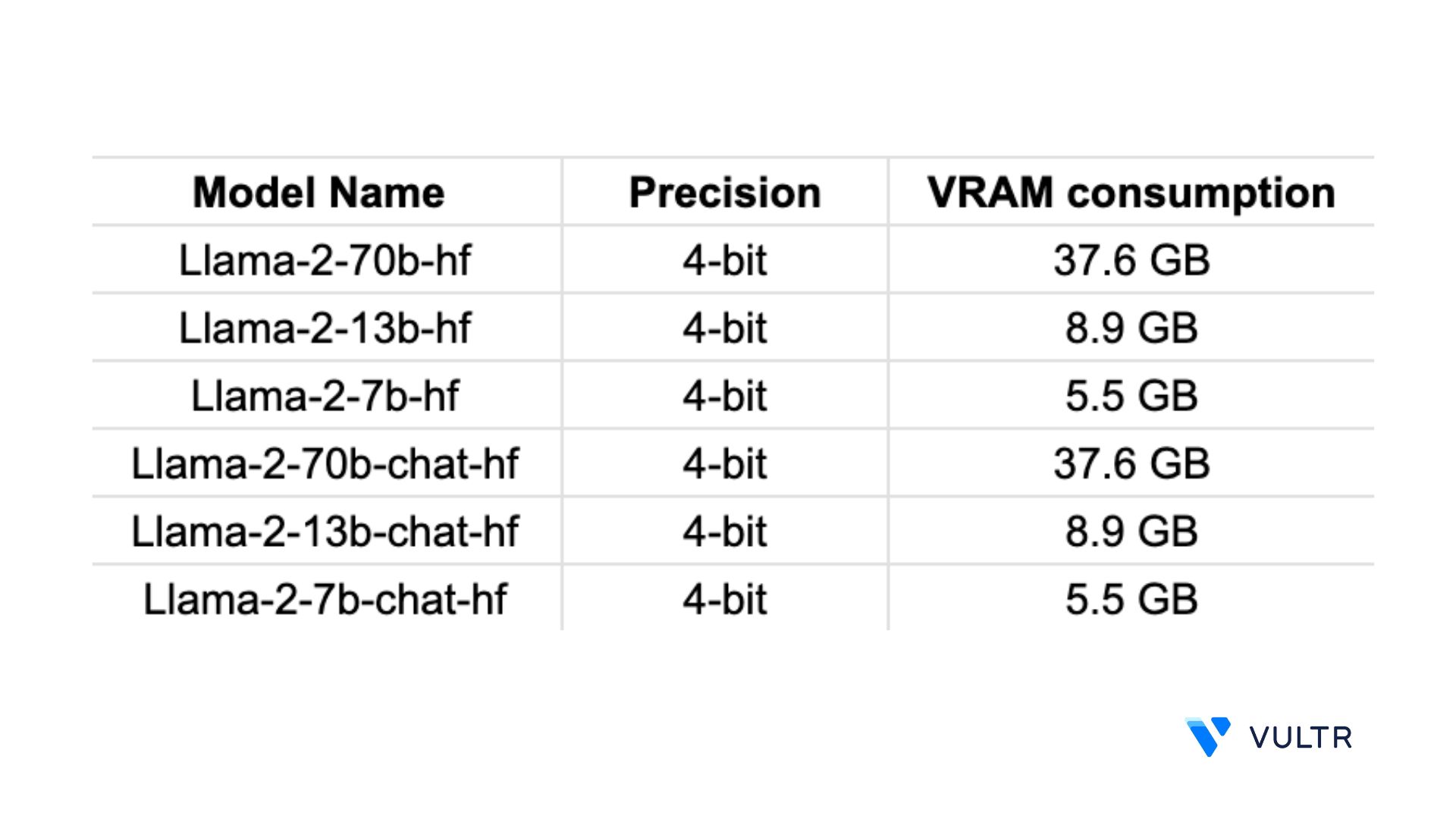

Usage tips: the Llama 2 models were trained using bfloat16, but the original inference uses float16. The checkpoints uploaded on the Hub use `torch_dtype = 'float16'`, which will be used by the... Kaggle is a community for data scientists and ML engineers offering datasets and trained ML models. We've partnered with Kaggle to integrate Llama 2... We have a broad range of supporters around the world who believe in our open approach to today's AI: companies that have given early feedback and are excited to build with Llama 2, cloud... Technical specifications: Llama 2 was pretrained on publicly available online data sources. The fine-tuned model, Llama Chat, leverages publicly available instruction datasets and over 1 million...
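To illustrate why the bfloat16 versus float16 distinction in the usage tip can matter, the sketch below computes the largest finite value of each format from its bit layout. The layouts used (float16: 5 exponent bits and 10 mantissa bits; bfloat16: 8 exponent bits and 7 mantissa bits) are the standard definitions, not something stated in the source, and `max_finite` is a hypothetical helper name.

```python
def max_finite(exponent_bits: int, mantissa_bits: int) -> float:
    """Largest finite value of an IEEE-style binary floating-point format.

    The all-ones exponent is reserved for infinity and NaN, so the largest
    usable unbiased exponent equals the bias, 2**(exponent_bits - 1) - 1.
    """
    bias = 2 ** (exponent_bits - 1) - 1
    largest_mantissa = 2 - 2.0 ** -mantissa_bits  # binary 1.111...1
    return largest_mantissa * 2.0 ** bias


if __name__ == "__main__":
    # Assumed standard layouts: (exponent bits, mantissa bits)
    formats = {"float16": (5, 10), "bfloat16": (8, 7)}
    for name, (e_bits, m_bits) in formats.items():
        print(f"{name}: max finite value = {max_finite(e_bits, m_bits):.4g}")
```

Both formats occupy 2 bytes per value, so memory use is the same. The trade-off is that float16 has more precision but a much narrower range, while bfloat16 covers roughly the same range as float32 with less precision.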
